Before you start this tutorial, I’m assuming you’ve already linked the Live Link app on your iPhone to Unreal and had a bit of a play with it. If not, I’d suggest going through my Set Up LiveLink with your Metahuman tutorial first to get it working.

Also, I’m researching all things Metahuman for a speech therapy app, so this tutorial only covers Metahumans. If you want to link up your own characters, you’d be better off reading through the official Unreal Engine tutorial.

From here, I’ll assume you have LiveLink set up and that when you press Play > Simulate, the metahuman copies your facial poses.

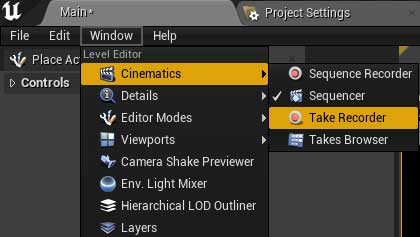

To record, open Take Recorder (Window > Cinematics > Take Recorder).

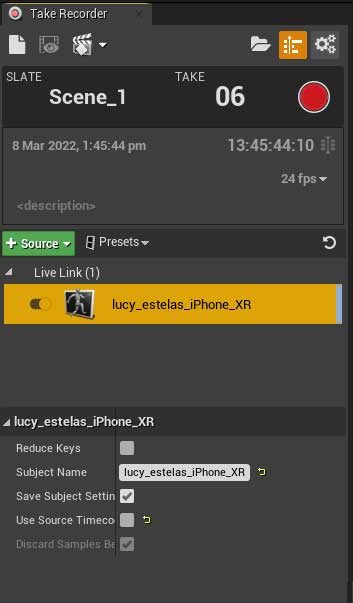

It opens in a small panel next to your viewport. Add your phone as a source by clicking the green + Source button > From LiveLink > choose your phone. I’m not recording audio because I’m only doing facial animations, but I think you can add an audio source here too (+ Source > Microphone Audio); do a quick Google search to confirm.

Click on your phone to bring up extra options and deselect Use Source Timecode.

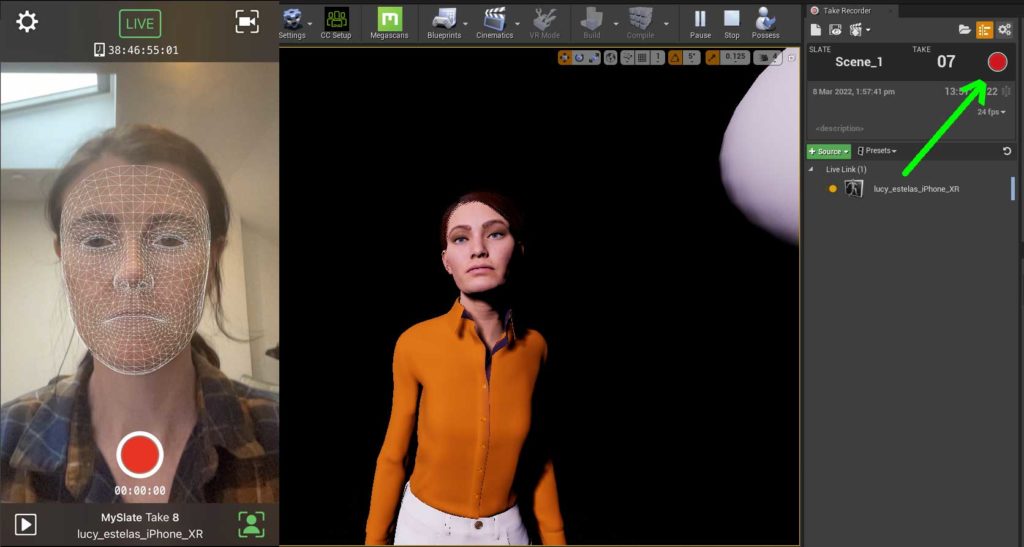

Start the Live Link app on your phone and choose Play > Simulate in Unreal to see your metahuman respond to your input. When you’re ready, press the red record button on the Take Recorder panel; it gives you a 3-second countdown.

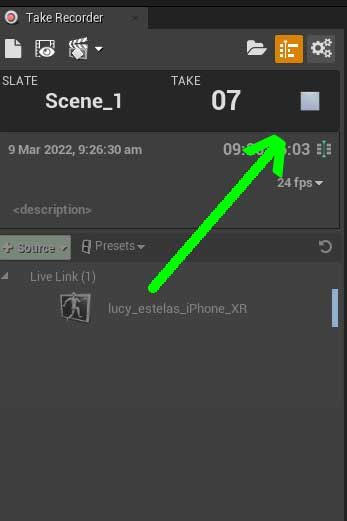

When you’re finished, hit the stop button.

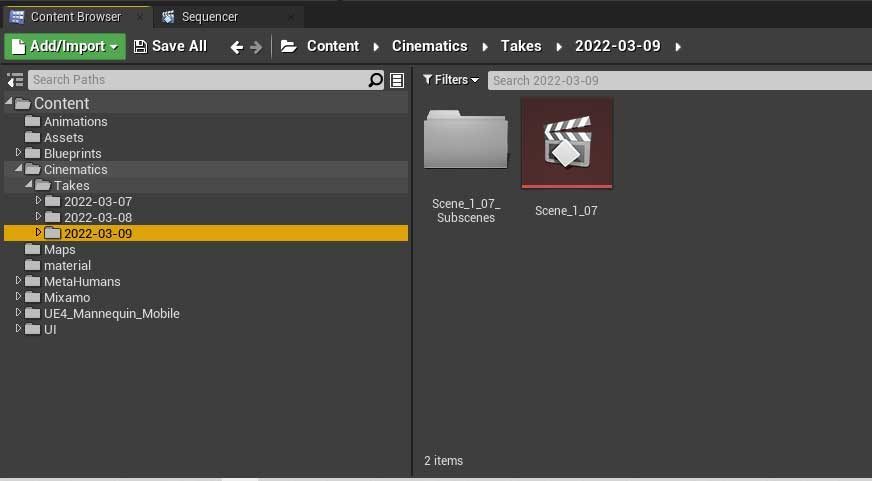

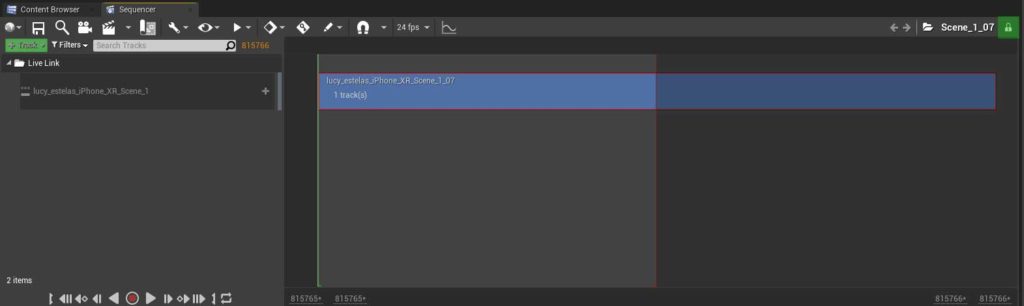

The recording is saved as a Level Sequence in a Cinematics > Takes > (date) folder. Open it, and if you are still in Play mode you can scrub back and forth to see the animation play back on your metahuman.

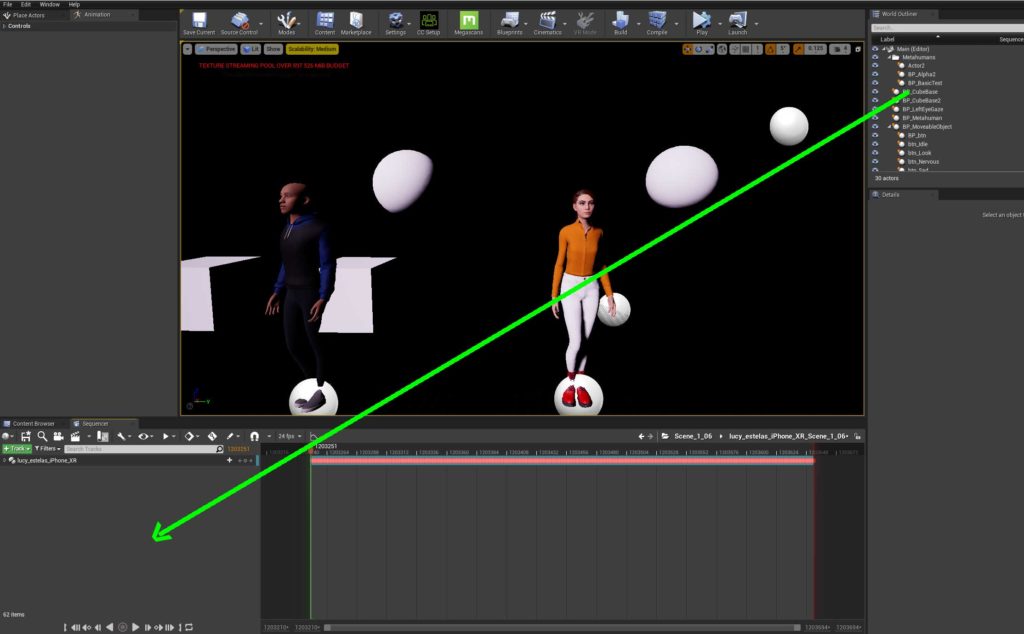

Okay, so now we have an animation, but I want to save it in a form I can apply to my metahuman when I press a button. I’ll be creating a lot of these facial animations and will call them all from a state machine in the metahuman’s facial animation blueprint, so the next step is to save this animation out. First, unlock the sequence by clicking the padlock in the top-right corner, then double-click the blue track to open it.

Drag your metahuman from the World Outliner into the Level Sequence timeline.

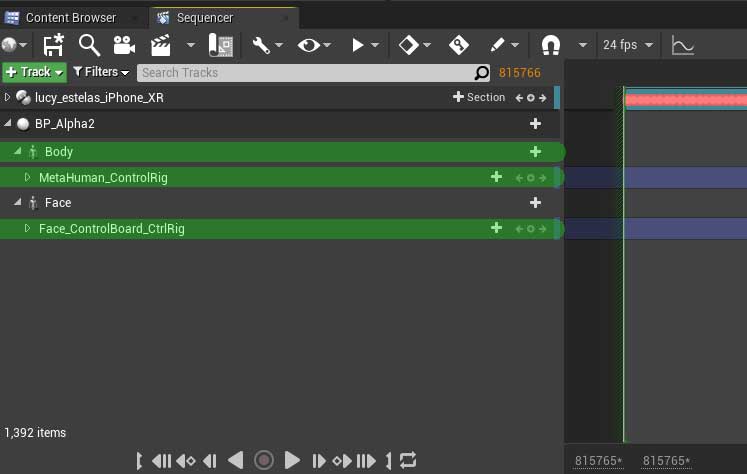

There are a few tracks we don’t need, so delete the Body, MetaHuman_ControlRig and Face_ControlBoard_CtrlRig tracks.

Save the animation by right-clicking the Face track and choosing Bake Animation Sequence. Give it a name, choose where to save it, click OK, and then click Export To Animation Sequence.

You can then open the animation asset and see it playing back on your metahuman head.

Now I have three animations saved out (idle, nervous and sad), and I want my metahuman to play them based on the button I press on the control panel in my HoloLens 2 app. I already have my Mixamo body animations playing, and now I want facial animations to go along with them.

For playback on your metahuman, you’ll be working with the Face_AnimBP. Search for it in your Content Browser and open it.

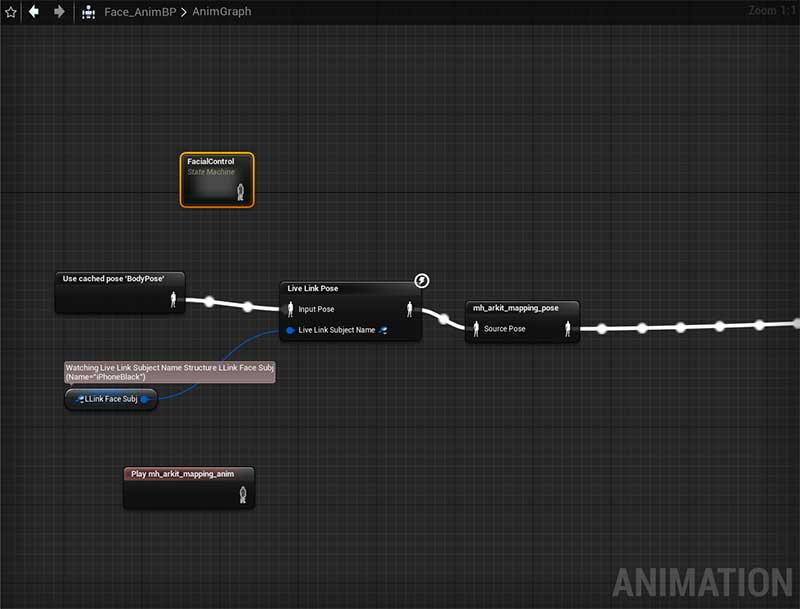

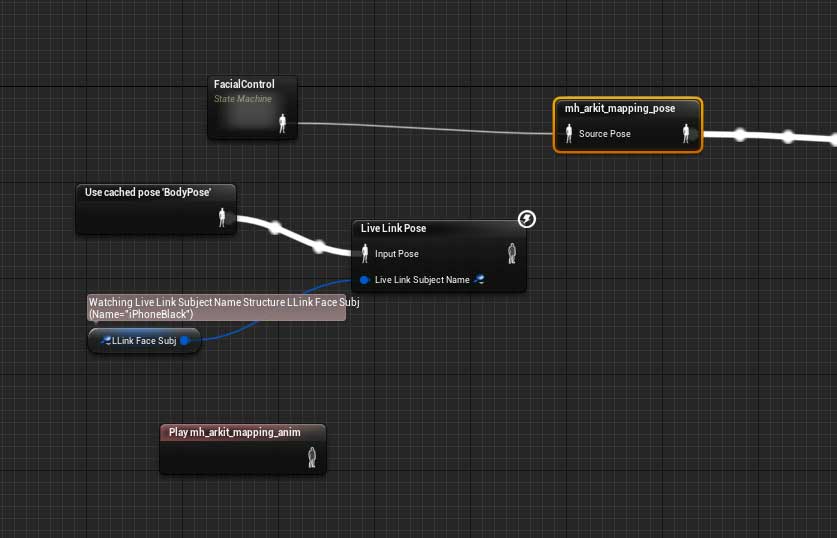

Open the AnimGraph and you’ll see that LiveLink is already set up and feeding data to your metahuman. Great, but we want to feed it our own animations, so we need a new State Machine: right-click in the graph and add a State Machine node. I’ve called mine FacialControl, but you can name it whatever you like. Double-click it to open it and set it up.

I’m going to assume here that you know how State Machines work and how they are set up; if not, have a quick Google for a tutorial on them. I’ve set mine up with three simple states: idle, upset and nervous.

Once you’ve set up your State Machine, link it into your metahuman by replacing the LiveLink connection with your State Machine.

From here, you’ll create your own way of controlling the State Machine, and no doubt it will be better and cleaner than my setup.

But as a quick rundown, here is how I’m calling the animations in my HoloLens 2 app.

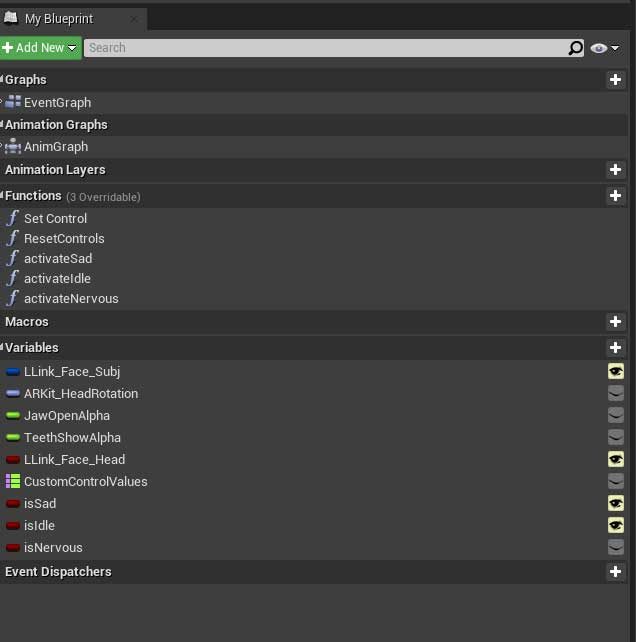

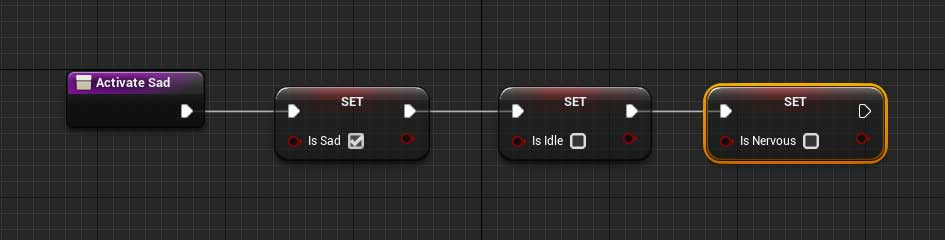

I’ve also set up three booleans, isSad, isIdle and isNervous, which I control through three functions: activateSad, activateIdle and activateNervous.

Those functions are super simple; they just set the values of the three booleans. My State Machine reads these variables and decides when to play each animation.
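The boolean-driven logic above can be sketched outside Unreal to make the flow easier to follow. The C++ below is a hypothetical plain model, not Unreal Engine or Blueprint API code: FaceAnimModel, FaceState and update() are illustrative names standing in for the Face_AnimBP variables and the FacialControl state machine, and it assumes each activate function clears the other two flags so only one emotion is requested at a time. The Upset state is driven by isSad, matching the idle/upset/nervous states and the isSad boolean described above.

```cpp
// Hypothetical plain C++ model of the facial state machine in Face_AnimBP.
// This is NOT Unreal Engine or Blueprint API code; all names are illustrative.

// The three states from the FacialControl state machine.
enum class FaceState { Idle, Upset, Nervous };

struct FaceAnimModel {
    // Mirrors the isIdle / isSad / isNervous Blueprint variables.
    bool isIdle = true;
    bool isSad = false;
    bool isNervous = false;

    // The state the machine is currently playing.
    FaceState current = FaceState::Idle;

    // Assumption: each activate function clears the other two flags,
    // so only one emotion is requested at a time.
    void activateIdle()    { isIdle = true;  isSad = false; isNervous = false; }
    void activateSad()     { isIdle = false; isSad = true;  isNervous = false; }
    void activateNervous() { isIdle = false; isSad = false; isNervous = true;  }

    // Checks only the transitions leaving the current state, roughly as an
    // animation state machine does on each update. Each transition rule
    // reads one of the booleans.
    FaceState update() {
        switch (current) {
            case FaceState::Idle:
                if (isSad) current = FaceState::Upset;
                else if (isNervous) current = FaceState::Nervous;
                break;
            case FaceState::Upset:
                if (isIdle) current = FaceState::Idle;
                else if (isNervous) current = FaceState::Nervous;
                break;
            case FaceState::Nervous:
                if (isIdle) current = FaceState::Idle;
                else if (isSad) current = FaceState::Upset;
                break;
        }
        return current;
    }
};
```

In this model a button press only needs to call one activate function; the state machine picks up the change on its next update, which is the same split of responsibilities as functions that set variables plus transition rules that read them.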

My HoloLens 2 app has a control panel that already talks to my ABP_Metahuman animation blueprint (linked to the metahuman_base_skeleton) and tells it which body animations to activate.

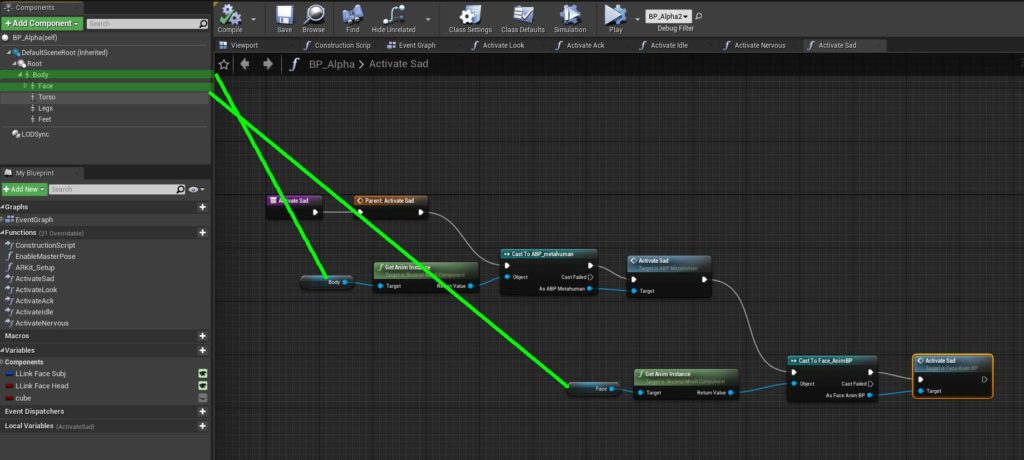

So in my metahuman’s blueprint (called Alpha), I have functions set up to talk to both the body skeleton animation blueprint (ABP_Metahuman) and the facial animation blueprint (Face_AnimBP).
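The routing described above, where one character blueprint forwards each button press to both animation blueprints, can be modeled the same way. Again, this is a hypothetical plain C++ sketch rather than Unreal code: Emotion, BodyAnim, FaceAnim, AlphaCharacter and onEmotionButton are illustrative names for the emotions, ABP_Metahuman, Face_AnimBP and the Alpha blueprint, and the assumption is that a single entry point per button sends the same emotion to both body and face.

```cpp
// Hypothetical plain C++ model of the Alpha blueprint's wiring.
// This is NOT Unreal Engine or Blueprint API code; all names are illustrative.

enum class Emotion { Idle, Sad, Nervous };

// Stands in for ABP_Metahuman (body animations on metahuman_base_skeleton).
struct BodyAnim {
    Emotion requested = Emotion::Idle;
    void setEmotion(Emotion e) { requested = e; }
};

// Stands in for Face_AnimBP; the flags mirror isIdle / isSad / isNervous.
struct FaceAnim {
    bool isIdle = true, isSad = false, isNervous = false;
    void activateIdle()    { isIdle = true;  isSad = false; isNervous = false; }
    void activateSad()     { isIdle = false; isSad = true;  isNervous = false; }
    void activateNervous() { isIdle = false; isSad = false; isNervous = true;  }
};

// Stands in for the Alpha character blueprint: one entry point per button
// that forwards the same emotion to both animation blueprints.
struct AlphaCharacter {
    BodyAnim body;
    FaceAnim face;

    void onEmotionButton(Emotion e) {
        body.setEmotion(e);  // body clip selection
        switch (e) {         // matching facial animation
            case Emotion::Idle:    face.activateIdle();    break;
            case Emotion::Sad:     face.activateSad();     break;
            case Emotion::Nervous: face.activateNervous(); break;
        }
    }
};
```

Routing every button through one function like this keeps the body and face in step: in the model, adding an emotion means one new enum value, one body case and one face flag, rather than separate button wiring for each blueprint.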

So when I run the app and press the various buttons, the whole metahuman updates with overly exaggerated emotions.